Nebraska Newspapers

Since 2007, the CDRH has been cultivating our web-based time machine, Nebraska Newspapers, in partnership with the Library of Congress’s National Digital Newspaper Program (NDNP) and funded by grants from the National Endowment for the Humanities (NEH). At the time of writing, we have published 45 newspapers with full text and high-resolution page scans. More are on the way, thanks to the third NDNP grant we received in 2016.

Plattsmouth Papers

Upon ingest of the Plattsmouth, Nebraska newspapers, CDRH staff noted signs of a rift in the space-time continuum: the dates assigned to some of the newspaper issues were wrong. Papers from the early 1900s were miskeyed as being from the early 1800s, a time when few white settlers were living in Nebraska and certainly when no newspapers were being published here. These errors were not caught in the validation process, because the validator only checks whether the date value has a valid date format, not whether the date is actually the correct one. Typos like this sound simple to fix, but changing the date across hundreds of filenames and within the XML of those files would take hours and hours to complete manually.

The Plattsmouth Public Libraries soon also noted that title shifts had not been identified. Plattsmouth has had two major newspapers, The Plattsmouth Herald and The Plattsmouth Journal, but both of these newspapers have undergone title changes throughout their history. According to standard cataloging practice, each newspaper title shift requires a unique Library of Congress Control Number (LCCN). Additionally, the NDNP guidelines require the use of print LCCNs, not those identifying the microfilm versions. Although the Plattsmouth papers had not been selected for inclusion in the NDNP, CDRH staff decided they should comply with the Library of Congress standards in hopes of being able to include the papers in Chronicling America in the future.

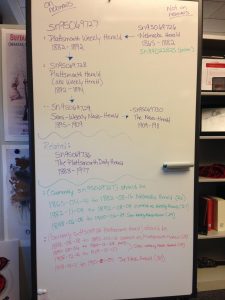

Given the information from the Plattsmouth Public Libraries, and armed with the US Newspaper Directory, our Metadata Encoding Specialist Laura Weakly investigated and sorted out what had been mixed up with the aid of her trusty whiteboard. Laura documented a few date ranges where LCCNs had been misapplied when the paper’s name had changed and identified where the microfilm LCCN had been used. Like correcting the dates, repairing these LCCN problems would involve similarly monumental amounts of manual work. Rather than undertake this time-consuming process, Laura approached the dev team about writing scripts to save time and minimize the possibility of new typos.

Python Scripts

To write the needed scripts, I decided to use our flux capacitor, Python, because it powers Chronam, the software behind the Library of Congress’s Chronicling America and other NDNP websites, including Nebraska Newspapers. We usually use Bash or Ruby scripts for our projects, but I wanted these to be written in the language familiar to others working on NDNP projects. I had only written a small handful of Python scripts in the past, but I looked forward to refreshing my time-saving skills with it.

Fix Dates by LCCN

Source: fix_dates_by_lccn.py

I chose to start by focusing on the incorrect dates as it seemed a little less complex than updating LCCNs.

The script begins by using argparse to implement common command line options and the necessary arguments to control the script, similar to how I had begun using getopts for my Bash scripts and OptionParser for my Ruby scripts.

    import argparse

    # Arguments
    # ---------
    parser = argparse.ArgumentParser()

    # Optional args
    parser.add_argument("-d", "--dry_run", action="store_true",
                        help="don't make any changes to preview outcome")
    parser.add_argument("-q", "--quiet", action="store_true",
                        help="suppress output")
    parser.add_argument("-s", "--search_dir",
                        help="directory to search (default: /batches)")
    parser.add_argument("-v", "--verbose", action="store_true",
                        help="extra processing information")

    # Positional args
    parser.add_argument("lccn", help="LCCN to be fixed")
    parser.add_argument("bad_date", help="incorrect date")
    parser.add_argument("new_date", help="corrected date")

    args = parser.parse_args()

This lets us first do a dry run with verbose output to verify which files the script finds for our arguments, without modifying anything. Then we run it again in quiet mode and let it silently work its magic for us:

    ./fix_dates_by_lccn.py -dv sn95069723 1821 1921
    Searching /batches/
    Search for bad dates in batch_pm@delivery2_ver01/data/sn95069723
    Search for bad dates in /00000000036/1821111001
    Fix date in file 0045.xml
    Fix date in file 0042.xml
    Fix date in file 0047.xml
    Fix date in file 1821111001.xml
    Replace 1821 in file name with 1921
    Fix date in file 0044.xml
    Fix date in file 0043.xml
    Fix date in file 0046.xml
    Replace 1821 in dir name with 1921
    Update dates in batch XML covering sn95069723
    ...

    ./fix_dates_by_lccn.py -q sn95069723 1821 1921

The script modifies the date arguments and stores them in each of the formats necessary to use in matching filenames and XML strings. Then the script calls functions to find and collect the directory paths corresponding to the LCCN argument and the directories within which match the incorrect date argument. It accomplishes this by calling os.walk.
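The directory search described above can be sketched roughly as follows. This is a minimal illustration written for Python 3, not the code from fix_dates_by_lccn.py: the function name `find_bad_date_dirs` is hypothetical, and the assumed layout (an LCCN directory containing reel directories, which contain issue directories whose names begin with the date) is inferred from the dry-run output above.

```python
import os


def find_bad_date_dirs(search_dir, lccn, bad_date):
    """Return issue directories under an LCCN directory whose names start with bad_date.

    Hypothetical helper: assumes paths shaped like
    <search_dir>/<batch>/data/<lccn>/<reel>/<issue>, where the issue
    directory name begins with the date (for example 1821111001).
    """
    matches = []
    for dirpath, dirnames, _filenames in os.walk(search_dir):
        # Only look at the LCCN directory itself and anything below it.
        if lccn not in dirpath.split(os.sep):
            continue
        for name in dirnames:
            if name.startswith(bad_date):
                matches.append(os.path.join(dirpath, name))
    return sorted(matches)
```

Calling `find_bad_date_dirs("/batches", "sn95069723", "1821")` would return the issue directories to fix, which the later steps can then process one at a time.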

After gathering the desired directory paths, the script identifies which files are ALTO XML or METS XML and uses the ElementTree XML module to read and modify them. Special care is needed here to preserve some parts of the XML documents.

XML Namespaces

In our original XML file, we see the list of namespaces at the top of the file:

<mets TYPE="urn:library-of-congress:ndnp:mets:newspaper:issue" PROFILE="urn:library-of-congress:mets:profiles:ndnp:issue:v1.5" LABEL="The Plattsmouth Journal, 1821-10-20" xmlns:mix="http://www.loc.gov/mix/" xmlns:ndnp="http://www.loc.gov/ndnp" xmlns:premis="http://www.oclc.org/premis" xmlns:mods="http://www.loc.gov/mods/v3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xlink="http://www.w3.org/1999/xlink" xmlns="http://www.loc.gov/METS/" xsi:schemaLocation=" http://www.loc.gov/METS/ http://www.loc.gov/standards/mets/version17/mets.v1-7.xsd http://www.loc.gov/mods/v3 http://www.loc.gov/standards/mods/v3/mods-3-3.xsd" >

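For ElementTree to write the original namespace prefixes back out, rather than generated ones such as ns0, the script must register every namespace used within a file. Registration affects how the XML is serialized, so it has to happen before the file is written; the script does it before parsing the XML: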

    # Set namespaces before parsing
    ET.register_namespace("", "http://www.loc.gov/METS/")
    ET.register_namespace("mix", "http://www.loc.gov/mix/")
    ET.register_namespace("ndnp", "http://www.loc.gov/ndnp")
    ET.register_namespace("premis", "http://www.oclc.org/premis")
    ET.register_namespace("mods", "http://www.loc.gov/mods/v3")
    ET.register_namespace("xsi", "http://www.w3.org/2001/XMLSchema-instance")
    ET.register_namespace("xlink", "http://www.w3.org/1999/xlink")
    ET.register_namespace("np", "urn:library-of-congress:ndnp:mets:newspaper")

The namespace for the <structMap> element further down in the file is not preserved by ElementTree though, so we manually re-add it:

    # Restore structmap namespace that ET doesn't write
    file = fileinput.FileInput(file_path, inplace=1)
    for line in file:
        print line.replace('<structMap>', '<structMap xmlns:np="urn:library-of-congress:ndnp:mets:newspaper">'),
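To see what registration changes, here is a small Python 3 sketch (the scripts themselves target Python 2). The sample document and the `serialize` helper are made up for illustration; only `ET.register_namespace`, `ET.fromstring`, and `ET.tostring` are real ElementTree calls.

```python
import xml.etree.ElementTree as ET

# Tiny made-up document using two of the namespaces from the METS file.
SAMPLE = (
    '<mets xmlns="http://www.loc.gov/METS/" '
    'xmlns:mods="http://www.loc.gov/mods/v3">'
    '<mods:title>The Plattsmouth Journal</mods:title>'
    '</mets>'
)


def serialize(xml_text, prefixes=None):
    """Parse xml_text and write it back out, optionally registering prefixes first."""
    for prefix, uri in (prefixes or {}).items():
        # register_namespace is global and only influences serialization.
        ET.register_namespace(prefix, uri)
    root = ET.fromstring(xml_text)
    return ET.tostring(root, encoding="unicode")


# Without registration, ElementTree invents prefixes such as ns0 and ns1.
print(serialize(SAMPLE))

# With registration, the original prefixes come back.
print(serialize(SAMPLE, {"": "http://www.loc.gov/METS/",
                         "mods": "http://www.loc.gov/mods/v3"}))
```

ElementTree only writes declarations for namespaces that appear in element or attribute names, which may be why a prefix that is referenced only inside attribute values, as np might be on structMap, has to be restored by hand.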

XML Comments

There are a few comments scattered throughout each XML document. I learned that retaining XML comments with ElementTree requires defining a custom XML parser:

    # XML parser to retain comments
    class CommentRetainer(ET.XMLTreeBuilder):

        def __init__(self):
            ET.XMLTreeBuilder.__init__(self)
            # assumes ElementTree 1.2.X
            self._parser.CommentHandler = self.handle_comment

        def handle_comment(self, data):
            self._target.start(ET.Comment, {})
            self._target.data(data)
            self._target.end(ET.Comment)

    # Parse XML using custom parser
    tree = ET.parse(file_path, parser=CommentRetainer())
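For readers on a newer interpreter, the same effect can be had without a subclass. The sketch below assumes Python 3.8 or later, where `TreeBuilder` accepts `insert_comments=True`; it is not what the scripts use, and the helper name and sample XML are invented for illustration.

```python
import xml.etree.ElementTree as ET


def parse_keeping_comments(xml_text):
    """Parse XML text so comments inside the root element stay in the tree."""
    # insert_comments=True asks TreeBuilder to add Comment nodes (Python 3.8+).
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    return ET.fromstring(xml_text, parser=parser)


root = parse_keeping_comments("<issue><!-- reel 36 --><date>1921-11-10</date></issue>")
print(ET.tostring(root, encoding="unicode"))
```

Comments that sit outside the root element are not attached to the tree by this approach, so they would still need separate handling.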

XML Rewriting and Name Updates

With ElementTree, XML element attributes are usually rewritten in a different order than in the original file, but thankfully this is one area where XML is flexible and remains valid. Once the XML is rewritten, the script updates file and directory names.
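The renaming step might look something like the following Python 3 sketch. The helper names `plan_renames` and `apply_renames` are hypothetical and the logic is an assumption based on the dry-run output shown earlier (file names first, then the directory), not the script's actual code.

```python
import os


def plan_renames(issue_dir, bad_date, new_date):
    """List (old, new) path pairs for names inside issue_dir, then issue_dir itself."""
    issue_dir = issue_dir.rstrip(os.sep)
    parent, dir_name = os.path.split(issue_dir)
    plan = []
    for name in sorted(os.listdir(issue_dir)):
        # Only the leading date is swapped, so digits later in the name are untouched.
        if name.startswith(bad_date):
            new_name = new_date + name[len(bad_date):]
            plan.append((os.path.join(issue_dir, name),
                         os.path.join(issue_dir, new_name)))
    if dir_name.startswith(bad_date):
        # The directory goes last so the file paths above stay valid while renaming.
        new_dir = os.path.join(parent, new_date + dir_name[len(bad_date):])
        plan.append((issue_dir, new_dir))
    return plan


def apply_renames(plan, dry_run=True):
    """Rename each pair in order; with dry_run=True nothing on disk changes."""
    for old, new in plan:
        if not dry_run:
            os.rename(old, new)
    return plan
```

Separating the plan from its execution mirrors the script's dry-run option: the same list can be printed for review or applied.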

Date and LCCN String Management

I didn’t realize until I had spent a fair amount of time testing the functionality above that it was also necessary to update the issue records within the batch XML files. The tricky part of that was extracting the tail of the incorrect date path including the LCCN to match against the corresponding strings in the XML. There are a lot of date and LCCN strings to manage throughout this process.

    # Determine batch file and bad date paths
    batch_path_re = re.compile('^(.+)\/sn[0-9]{8}\/')
    batch_path = batch_path_re.match(bad_date_path).group(1)
    batch_files = [os.path.join(batch_path, "batch.xml"), os.path.join(batch_path, "batch_1.xml")]

    bad_date_path_tail_re = re.compile(".+\/({0}\/.+)$".format(lccn))
    bad_date_path_tail = bad_date_path_tail_re.match(bad_date_path).group(1)
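As a worked example, here is what those two expressions extract from one path. The sample path is an assumption pieced together from the dry-run output shown earlier, and the snippet uses Python 3 with raw strings rather than the Python 2 of the script.

```python
import re

lccn = "sn95069723"
# Sample path assembled from the dry-run output (an assumption, not real script input).
bad_date_path = "/batches/batch_pm@delivery2_ver01/data/sn95069723/00000000036/1821111001"

# Everything before the LCCN directory: where batch.xml is expected to live.
batch_path_re = re.compile(r"^(.+)/sn[0-9]{8}/")
batch_path = batch_path_re.match(bad_date_path).group(1)

# The tail starting at the LCCN: the string to match inside the batch XML.
bad_date_path_tail_re = re.compile(r".+/({0}/.+)$".format(re.escape(lccn)))
bad_date_path_tail = bad_date_path_tail_re.match(bad_date_path).group(1)

print(batch_path)          # /batches/batch_pm@delivery2_ver01/data
print(bad_date_path_tail)  # sn95069723/00000000036/1821111001
```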

Fix LCCN by Date

Source: fix_lccn_by_date.py

This script begins much like the first: it handles command line options and the necessary arguments, then finds the paths to the directories and files that need correcting. Updating the METS XML files doesn’t differ much either.

Things get interesting and complicated, though, when the script needs to track which related reel files are copied and deleted, and whether the changes empty the directory of the incorrect LCCN. References to removed reel files and emptied directories also have to be deleted from the batch XML file, and moved reels have to be inserted there in the correct order.

    # Add copied reel to batch reels
    if not copied_reel_added:
        if not args.quiet and not batch_file[-6:] == "_1.xml":
            print " Adding copied reel {0} to batch XML".format(reel_copied)

        reel_number = reel_copied.split('/')[1]
        reel_element = ET.Element("reel", {"reelNumber": reel_number})
        reel_element.text = reel_copied +'/'+ reel_number +'.xml'
        reel_element.tail = '\n\t'

        if copied_reel_index:
            root.insert(copied_reel_index, reel_element)
        else:
            root.append(reel_element)
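How the insertion index might be determined is not shown above, so the following Python 3 sketch is only one plausible approach. The helper `reel_insert_index` is hypothetical, and the sample batch document is simplified (no namespaces, invented paths). It relies on reel numbers being zero-padded to the same width, so that plain string comparison orders them correctly.

```python
import xml.etree.ElementTree as ET


def reel_insert_index(root, reel_number):
    """Return the child index that keeps <reel> elements sorted by reelNumber.

    Returns None when the new reel belongs after every existing reel.
    """
    for index, child in enumerate(root):
        if child.tag == "reel" and child.get("reelNumber", "") > reel_number:
            return index
    return None


root = ET.fromstring(
    '<batch>'
    '<issue>sn95069723/00000000036/1921111001/1921111001.xml</issue>'
    '<reel reelNumber="00000000035">sn95069723/00000000035/00000000035.xml</reel>'
    '<reel reelNumber="00000000037">sn95069723/00000000037/00000000037.xml</reel>'
    '</batch>'
)
new_reel = ET.Element("reel", {"reelNumber": "00000000036"})
new_reel.text = "sn95069723/00000000036/00000000036.xml"

index = reel_insert_index(root, "00000000036")
# Compare against None explicitly: index 0 is a valid position but is falsy.
if index is not None:
    root.insert(index, new_reel)
else:
    root.append(new_reel)
```

The excerpt above uses a plain truthiness check on the index instead; whether an index of 0 can occur there depends on code not shown in this post.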

Testing the Scripts

While writing the scripts, I manually identified and downloaded a small handful of XML files from the affected newspapers from the server without their accompanying high resolution scan images. I kept an unmodified set of the files and repeatedly copied them to another location, ran the scripts on them, evaluated the output, and deleted them. In hindsight, I could have just made a temporary Git repository and reset or checked out the unmodified files after each time I ran the scripts on them. But this slip of the mind didn’t cost me much time.

Downloading Bulk Newspaper Files

When I felt the scripts were ready to test on the entire corpus of Plattsmouth papers, I researched how to exclude the image files so I could save time by downloading approximately 4GB of files instead of 300GB. I found an rsync option that provided exactly what I was looking for:

rsync -ahu --info=progress2 --exclude '*.jp2' server_name:/batches/pm_delivery* .

Logical Volume Manager (LVM) Snapshots

Even after excluding the image files, re-copying the 4GB of XML files after each run of the scripts would have been too time-consuming. If I had thought to use Git, this might have been simpler to accomplish, though I write that hesitantly because with this much data even Git might have been significantly slower. I had been looking for a good reason to learn to use LVM snapshots since reading about them a few years ago, and I have partitioned my disks with that possibility in mind ever since. More specifically, I have been partitioning with LVM thin provisioning, a feature of LVM2.

If you’re unfamiliar, I recommend reading the Red Hat documentation on Snapshot Volumes and Creating Snapshot Volumes. Here is a brief description and their particular use in this situation:

The LVM snapshot feature provides the ability to create virtual images of a device at a particular instant without causing a service interruption.

…

Because the snapshot is read/write, you can test applications against production data by taking a snapshot and running tests against the snapshot, leaving the real data untouched.

This feature requires a relatively modern Linux system and the necessary underlying LVM2 partitioning scheme. I created my thin-provisioned snapshot and mounted it with the following commands:

    lvcreate -s -kn --name newspapers_snapshot data/newspapers
    mount /dev/data/newspapers_snapshot /data/newspapers_snapshot

I then ran my scripts on the files in /data/newspapers_snapshot. After the scripts finished, I could almost instantly delete the modified snapshot and create a fresh one, repeating as needed. To be even more thorough, I later downloaded the full 300GB data set. The LVM snapshot process was still lightning fast with all the full-size page images included.

A cursory search shows that Windows provides a similar snapshot feature via Shadow Copy and Mac OS users may gain the capability with the third-party software Paragon Snapshot. I haven’t read much into or tried them though, so I can’t say whether they work as well or not.

Updating Production Files

After I was convinced that everything was working correctly, I copied the scripts to the production server and tried to run them. But I had provided the wrong fuels for the flux capacitor!

Python 2.6

I developed the scripts on my Fedora Workstation desktop, but the production server runs the latest CentOS 6 release, which uses Python 2.6. Thankfully, the only change I had to make was to add positional argument specifiers to the replacement fields in the format strings I was passing to the print statement.

    print " Could not find batches with bad date {}".format(bad_date) # Python 2.7
    print " Could not find batches with bad date {0}".format(bad_date) # Python 2.6

ElementTree Module

The version of the ElementTree module on CentOS 6 differed from the version on my desktop as well, so some of the functions the script calls don’t exist there. I learned that Python searches the directory of the script being executed first when importing modules, so I tried copying the module files from my workstation to the scripts’ directory on the server. Great Scott! It worked!

And that was how we prevented a paradox that could have caused a chain reaction that would unravel the very fabric of the space-time continuum and destroy the entire universe… or at least could have left some inaccuracies in the Plattsmouth newspapers’ history.

Many thanks to Laura Weakly, Karin Dalziel, and Jessica Dussault for their proofreading and feedback on this post.